Blog

Atom feed is here.

This post is more like an entry in my journal that I've made public, a post about finding my way in the world of game development.

Here is the curated commit log from this last week's development.

2026-02-03

feat: removed entity action timer; moves are now turn-blocking anims.

2026-02-03

feat: render entity index in graphical turn queue debug visualization

2026-02-03

feat: allow mult. entities in turn queue to move on same frame

2026-02-03

feat: introduce reaction to entity death after attack animations play

2026-02-06

feat: add component to support entity pos interp. during movement

This also updates the camera to move with the player as position is interpolated, having the effect of a smooth-scrolling camera instead of a camera that moves in units of whole tiles.
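The interpolation commit above comes down to two steps. The first blends between the start and end tile over the duration of a move. The second centers the camera on the blended position instead of on the tile. Below is a minimal C sketch of that idea; the game itself is written in Jai. It assumes linear interpolation, a fixed per-move duration in seconds, and a 16-pixel tile. Every name in it (MoveInterp, interp_update, interp_position, camera_origin, TILE_SIZE) is hypothetical and not taken from the game.

```c
#include <assert.h>

/* Sketch only: all names and the tile size are hypothetical. */
#define TILE_SIZE 16.0f /* assumed tile size in pixels */

typedef struct { float x, y; } Vec2;

/* Interpolation component: one move from a tile to an adjacent tile. */
typedef struct {
    Vec2 from_tile, to_tile; /* tile coordinates */
    float elapsed, duration; /* seconds */
} MoveInterp;

static float clamp01(float t) {
    return t < 0.0f ? 0.0f : (t > 1.0f ? 1.0f : t);
}

/* Advance the move by dt seconds; returns 1 once the move has finished. */
static int interp_update(MoveInterp *m, float dt) {
    m->elapsed += dt;
    return m->elapsed >= m->duration;
}

/* Linearly interpolated position in pixels. */
static Vec2 interp_position(const MoveInterp *m) {
    float t = clamp01(m->elapsed / m->duration);
    Vec2 p;
    p.x = (m->from_tile.x + (m->to_tile.x - m->from_tile.x) * t) * TILE_SIZE;
    p.y = (m->from_tile.y + (m->to_tile.y - m->from_tile.y) * t) * TILE_SIZE;
    return p;
}

/* Camera origin that keeps the given pixel position centered on screen.
   Because the target moves continuously, so does the camera. */
static Vec2 camera_origin(Vec2 target, float screen_w, float screen_h) {
    Vec2 c;
    c.x = target.x - screen_w * 0.5f;
    c.y = target.y - screen_h * 0.5f;
    return c;
}
```

Because t is clamped to 1, the final frame lands exactly on the destination tile. A turn-blocking move can therefore wait until interp_update returns 1 before the next entity takes its turn.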

This morning I was thinking about the Cassandra complex and the mentality around it. Over the last few years, popular podcasts (which I'll just not name) have repopularized the term. Often the speakers use it for cathartic relief, to vent frustration over an idea they feel mocked, attacked, or persecuted for rather than rewarded for. For a long time, I thought, "yeah, that sucks...," but I realized even this is an acceptance of self-pity. Just reframe the situation, and you have a huge win on your hands. You want to be right and early. That is where you make outsized returns. So if you know things should be done differently, do them differently, even if that imposes a cost rather than an immediate reward in the short term. When the long term pans out and the world catches up, you'll be many steps ahead and ready to launch that book, deploy that technology, release that game, mentor those people, etc. Even this is a grow-the-pie winning opportunity.

I missed the update two weeks ago, and last week's update is late, so this post is a double feature! Recently I've been spending a lot more time editing Twitch VODs into a YouTube dev log of sorts. This amounts to me listening to myself build my own game instead of spending time building the game. These blog posts are also me talking about working instead of "working," so I will probably start making these updates more concise.

I will still post small videos of gameplay directly in some of these updates, though, and they will serve as quick visual checkpoints in the journey. I will also still write the occasional long post when I have a topic I want to discuss in more detail.

This last week I focused on implementing basic animations.

This week I implemented an entity-component system, a basic player attack, entity deletion, and text and textbox rendering.

This week I implemented A* and added support for multiple enemies to the game.

Here is the commit log from last week's work.

2025-12-23

feat: introduce default allocator replacement for context

2025-12-26

feat: introduce "standard library" (sl) with ring buffer and test

2025-12-26

feat: add heap data structure and min-heap procedures to sl

2025-12-27

feat: separate min_heap out of heap impl; add max_heap; add tests

The main focus this week was pathfinding, but I was also up to some other peripheral things.

Firstly, we have the introduction of a default allocator replacement for the Jai context. Jai implicitly passes a special variable, called the context, to each scope. I think I discussed this earlier, but in short, the context has various useful properties on it, such as the currently preferred logging function and the preferred default and temporary allocators. In my case, I want to avoid allocating using the default allocator. I also want to avoid allocating using the temp allocator as much as possible, but I'm less concerned about this because I clear its storage at the beginning of each frame. To catch usage of the default allocator, I implemented my own allocator, which just calls assert(false, "[...]") on any operation requested of it. This caught a few issues, mostly String.join calls, which I fixed using

String.join(...,, allocator=temp);

Note the ,, which is an operator in Jai that allows you to modify context properties at callsites for arbitrary functions. I would like to use my own temporary allocators provided through the most basic of arenas, but for Jai "standard library" things like this, it's easier to use Jai's temporary allocator. I could always implement the allocator interface for my arena and thus use my own backing memory pool, though. Probably a project for a later time.
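C has no implicit context and no ,, operator, so the closest C sketch passes the allocator explicitly. The following assumes three hypothetical pieces. The first is an Allocator struct made of a procedure plus user data. The second is trap_alloc, which asserts on every request. The third is a tiny bump allocator standing in for the temporary allocator. join2 is a made-up stand-in for a library call like String.join. None of this is Jai's actual Allocator API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical allocator interface (procedure + user data); not Jai's type. */
typedef void *(*AllocProc)(void *user_data, size_t size);

typedef struct {
    AllocProc proc;
    void *user_data;
} Allocator;

/* Trap allocator: any request means some code fell back to the default
   allocator, which we want to forbid. */
static void *trap_alloc(void *user_data, size_t size) {
    (void)user_data;
    (void)size;
    assert(0 && "default allocator used; pass a temp or arena allocator");
    abort(); /* still trap when NDEBUG compiles the assert away */
}

/* Minimal bump allocator used here as the per-frame temporary allocator. */
typedef struct {
    unsigned char *base;
    size_t capacity, used;
} Bump;

static void *bump_alloc(void *user_data, size_t size) {
    Bump *b = (Bump *)user_data;
    if (size > b->capacity - b->used) return NULL; /* out of space */
    void *p = b->base + b->used;
    b->used += size; /* no alignment handling: fine for char data only */
    return p;
}

/* Hypothetical library routine that allocates through whatever allocator it
   is handed, loosely like String.join(...,, allocator=temp). */
static char *join2(Allocator a, const char *x, const char *y) {
    size_t nx = strlen(x), ny = strlen(y);
    char *out = (char *)a.proc(a.user_data, nx + ny + 1);
    if (out == NULL) return NULL;
    memcpy(out, x, nx);
    memcpy(out + nx, y, ny + 1); /* also copies y's terminating NUL */
    return out;
}

/* The default allocator traps; callers must opt in to temp explicitly. */
static const Allocator default_allocator = { trap_alloc, NULL };
```

Calling join2(default_allocator, ...) would stop the program at the offending call site, which is exactly the point. Passing the temp allocator is the C analogue of the ,, override.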

Toward pathfinding, I have a simple grid map with a uniform cost to move between squares. I recalled the standard A* algorithm for pathfinding in such situations. It's been over 10 years since I've dealt with A*, though, so I needed a refresher. I came across Red Blob Games. This blog and the author's other sites linked from it are a wealth of information on algorithms for games, particularly top-down 2D ones such as mine. I highly recommend checking the site out if you're interested in the topic.

I thought I was going to use a queue, and a ring buffer would work well here, so I implemented one. I followed a great tip from Juho Snellman on using only positive indices (no forced wrap-around via %) and masking the indices only when you need to look into the backing storage. Clever and neat.
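Here is a minimal C sketch of that ring buffer trick. It assumes a fixed power-of-two capacity and int elements, both my choices for brevity. Masking only equals taking the index modulo the capacity when the capacity is a power of two. The read and write counters only ever increase, and the mask is applied only when indexing the backing array. Unsigned subtraction keeps the element count correct even after the 32-bit counters wrap.

```c
#include <assert.h>
#include <stdint.h>

/* Capacity must be a power of two so that (i & RING_MASK) == i % RING_CAPACITY. */
#define RING_CAPACITY 8u
#define RING_MASK (RING_CAPACITY - 1u)
_Static_assert((RING_CAPACITY & RING_MASK) == 0, "capacity must be a power of two");

typedef struct {
    int items[RING_CAPACITY];
    uint32_t read;  /* only ever incremented */
    uint32_t write; /* only ever incremented */
} Ring;

/* Unsigned subtraction stays correct even after the counters wrap at 2^32. */
static uint32_t ring_count(const Ring *r) { return r->write - r->read; }

static int ring_push(Ring *r, int value) {
    if (ring_count(r) == RING_CAPACITY) return 0; /* full */
    r->items[r->write & RING_MASK] = value;       /* mask only on access */
    r->write++;
    return 1;
}

static int ring_pop(Ring *r, int *out) {
    if (ring_count(r) == 0) return 0; /* empty */
    *out = r->items[r->read & RING_MASK];
    r->read++;
    return 1;
}
```

A side benefit of unmasked counters is that "full" (write - read == capacity) and "empty" (write == read) are distinct states. The classic wrap-on-increment version needs a wasted slot or an extra flag to tell them apart.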

Now, it turns out I actually wanted a priority queue, so I decided to use a min heap for that. To my knowledge, Jai does not provide a min heap, so I implemented one of those as well. To brush up, I referenced the classic Introduction to Data Structures and Algorithms book, which I had also learned from in college. After re-reading a very small portion of it recently, I admit I cannot recommend it. It does many things well, but I find it overreaches with its advice in places. It also (probably accidentally) misrepresents some concepts by overfitting descriptions to very narrow use cases in specific languages, for example by calling the heap a region of garbage-collected storage. Anyway, it did the job.

I ultimately implemented a heap (heap.jai) and then implemented the min-heap and max-heap interfaces in respective files that both import heap.jai. I reintroduced my "sl" concept from when I was playing with data structure generation via the C preprocessor two or three weeks ago. The sl module has a special file called test.jai, which provides a main function, imports all of the files in sl, and tests their features. A build.jai file in sl builds a binary from test.jai. Building and running the tests is a manual process, but I don't anticipate changing sl so frequently that I'll miss automation. The exception is that one time when I will probably change it, introduce a bug, and forget to run the tests haha.
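The heap.jai / min-heap / max-heap split maps naturally onto one heap parameterized by a comparison. The C sketch below assumes int items, a fixed capacity, and a function-pointer comparison. The names are illustrative and not taken from the sl module.

```c
#include <assert.h>
#include <stddef.h>

/* Generic binary heap over ints; the comparison decides min vs. max.
   Fixed capacity for brevity; real code might take an arena instead. */
#define HEAP_CAPACITY 64

typedef int (*HeapBefore)(int a, int b); /* nonzero if a belongs above b */

typedef struct {
    int items[HEAP_CAPACITY];
    size_t count;
    HeapBefore before;
} Heap;

static void heap_swap(int *a, int *b) { int t = *a; *a = *b; *b = t; }

static int heap_push(Heap *h, int value) {
    if (h->count == HEAP_CAPACITY) return 0;
    size_t i = h->count++;
    h->items[i] = value;
    /* Sift up while the new item belongs above its parent. */
    while (i > 0) {
        size_t parent = (i - 1) / 2;
        if (!h->before(h->items[i], h->items[parent])) break;
        heap_swap(&h->items[i], &h->items[parent]);
        i = parent;
    }
    return 1;
}

static int heap_pop(Heap *h, int *out) {
    if (h->count == 0) return 0;
    *out = h->items[0];
    h->items[0] = h->items[--h->count];
    /* Sift down: swap with whichever child belongs highest. */
    size_t i = 0;
    for (;;) {
        size_t left = 2 * i + 1, right = left + 1, top = i;
        if (left < h->count && h->before(h->items[left], h->items[top])) top = left;
        if (right < h->count && h->before(h->items[right], h->items[top])) top = right;
        if (top == i) break;
        heap_swap(&h->items[i], &h->items[top]);
        i = top;
    }
    return 1;
}

/* The min-heap and max-heap "interfaces" differ only in the comparison. */
static int less_than(int a, int b) { return a < b; }
static int greater_than(int a, int b) { return a > b; }

static Heap min_heap_make(void) { Heap h = { {0}, 0, less_than }; return h; }
static Heap max_heap_make(void) { Heap h = { {0}, 0, greater_than }; return h; }
```

For a pathfinding priority queue, the items would be (priority, node) pairs ordered by priority. The sift-up and sift-down logic stays the same.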

I then got to implementing A*. The work is on a branch currently and will be the subject of this week's update.
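For reference, here is a compact C sketch of A* on a grid like the one described above. It assumes 4-connected movement, a uniform step cost of 1, and the Manhattan distance as the heuristic, which is admissible and consistent under those assumptions. To stay self-contained, it scans the open set linearly instead of using a min-heap. A priority queue would replace that O(n) scan per step. The grid encoding ('#' for walls) and all names are assumptions, not the code on the branch.

```c
#include <assert.h>
#include <stdlib.h>

/* A* on a 4-connected grid, uniform step cost 1, Manhattan heuristic.
   The open set is scanned linearly for brevity. */
#define MAX_W 32
#define MAX_H 32
#define MAX_CELLS (MAX_W * MAX_H)

typedef struct {
    int w, h;
    const char *cells; /* row-major; '#' = wall, anything else = walkable */
} Grid;

static int manhattan(int x0, int y0, int x1, int y1) {
    return abs(x0 - x1) + abs(y0 - y1);
}

/* Returns the step count of a shortest path, or -1 if the goal is unreachable.
   If came_from is non-NULL, it receives each reached cell's predecessor index. */
static int astar(const Grid *g, int sx, int sy, int gx, int gy, int *came_from) {
    int cost[MAX_CELLS]; /* best known g-cost; -1 = not reached yet */
    char open[MAX_CELLS], closed[MAX_CELLS];
    int prev_local[MAX_CELLS];
    int *prev = came_from ? came_from : prev_local;
    int n = g->w * g->h;
    assert(g->w <= MAX_W && g->h <= MAX_H);
    for (int i = 0; i < n; i++) { cost[i] = -1; open[i] = 0; closed[i] = 0; prev[i] = -1; }

    int start = sy * g->w + sx, goal = gy * g->w + gx;
    cost[start] = 0;
    open[start] = 1;
    static const int dx[4] = {1, -1, 0, 0}, dy[4] = {0, 0, 1, -1};

    for (;;) {
        /* Pick the open cell with the lowest f = g + h. */
        int cur = -1, best_f = 0;
        for (int i = 0; i < n; i++) {
            if (!open[i]) continue;
            int f = cost[i] + manhattan(i % g->w, i / g->w, gx, gy);
            if (cur == -1 || f < best_f) { cur = i; best_f = f; }
        }
        if (cur == -1) return -1; /* open set exhausted */
        if (cur == goal) return cost[cur];
        open[cur] = 0;
        closed[cur] = 1; /* consistent heuristic: never needs reopening */

        int cx = cur % g->w, cy = cur / g->w;
        for (int k = 0; k < 4; k++) {
            int nx = cx + dx[k], ny = cy + dy[k];
            if (nx < 0 || ny < 0 || nx >= g->w || ny >= g->h) continue;
            int next = ny * g->w + nx;
            if (g->cells[next] == '#' || closed[next]) continue;
            int new_cost = cost[cur] + 1; /* uniform step cost */
            if (cost[next] == -1 || new_cost < cost[next]) {
                cost[next] = new_cost;
                prev[next] = cur;
                open[next] = 1;
            }
        }
    }
}
```

Walking prev back from the goal index to the start yields the path in reverse. Each step along that chain lowers the g-cost by exactly 1.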

I also started streaming live coding (and some gaming) on Twitch, as noted in a previous post. The idea here is to build even the smallest of audiences, or at least a presence, in parallel with building the game instead of serializing the process. Presumably building an audience takes just as long as building the game itself, if not longer, but what do I know? I'm totally new to this aspect!

I decided to give streaming a go. You can check out the content here. I will try streaming both development and games here and there. The motivation is building an audience more organically in the event I do end up trying to market a game.

Here is the gist of the commit log for last week's dev log; this post is coming in a bit late.

2025-12-15

feat: have aseprite exporter export to build/, not data/

2025-12-15

chore: bump SDL3 to 3.2.28, SDL_image to 3.2.4

2025-12-15

chore: move aseprite exporter into subfolder; add tools/jai-sdl3 submod

2025-12-15

feat: introduce main.jai; use SDL3 bindings to open window, cap fps

2025-12-19

feat: finish rewriting in Jai; add generated SDL3_image bindings

2025-12-19

feat: parse spawn layer from map asset; randomly spawn player/enemy

2025-12-19

feat: implement Map.assign_entity_locations, consolidate asset paths

The big thing from this last week was revisiting Jai's bindings generator and committing to Jai, not Odin. You can see on the 15th that I was again tempted by Jai, brought in (my own fork of) the jai-sdl3 bindings generator wrapper for SDL3, and proceeded to rewrite the game code so far in Jai.

After resting two weekends ago, I realized that I didn't give the bindings generator a fair shake. I mentioned it had some issues, or maybe that I was just holding it wrong. I was just holding it wrong. I wrote a simple C library, compiled it to dynamic and static libraries, and learned how to build bindings for these. The knowledge gained here scaled up very quickly and let me understand the bindings generator as applied to SDL. I tweaked the implementation (of just the Linux-targeted portion) of the wrapper to not require a system installation of SDL. This way I can build bindings for my copies of SDL3 that I build from source. I also added support for SDL3_image. This was much simpler than the SDL3 support since SDL_image.h is a comparatively small file. The generator accepts a visitor that can inspect each declaration as it is processed and decide what to do: generate bindings with no customization, customize the generation, or skip the declaration. Since SDL_image.h includes SDL.h, the bindings generator will try to (re)produce bindings for SDL3. To avoid this, we just have the visitor ignore declarations that don't start with IMG_.

Additionally, we add a header (or footer) to the output to

#import "sdl3";

where sdl3 is the name of the module that provides the SDL3 bindings.

The most time-consuming part of the port was rethinking the memory allocators. In the end, I kept my own arena implementation and still pass those arenas around. However, I also had to figure out what to do with Jai's implicit context passed to each procedure, namely the context's allocators. There is a good thread in the Jai beta Discord about this. It reminds us that the main purpose of the allocators in the context is to help control which memory is used by modules that you didn't implement yourself. So it makes sense for me to keep passing my own arenas around. I can also call any Jai procedure that uses temporary storage at will, since I clear Jai's temporary storage at the beginning of each frame in my game. In fact, writing this just made me realize I need to clear my temp arena at the beginning of each frame too; I forgot to do that...

I haven't studied the implementation of Jai's temporary allocator, but from a cursory glance I think it allocates its backing storage on demand and grows the storage as needed. This means that, unlike with my arenas, the memory is not allocated entirely up front, and allocating up front was the entire purpose of introducing arenas. That can be solved, though, and I believe Jai itself provides some allocator implementations (Pool?) that allow for up-front, fixed allocation.
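For contrast, here is a minimal C sketch of the up-front approach. The arena reserves its backing memory once and hands out aligned chunks by bumping an offset. When full, it returns NULL instead of growing, and it is reset at the start of each frame. The names and the malloc-backed reservation are assumptions for illustration. This is not the game's arena or Jai's Pool.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Fixed-size arena: all backing memory is reserved once, up front, and the
   arena never grows. Running out returns NULL instead of allocating more. */
typedef struct {
    unsigned char *base;
    size_t capacity;
    size_t used;
} Arena;

static int arena_init(Arena *a, size_t capacity) {
    a->base = (unsigned char *)malloc(capacity); /* the only allocation */
    a->capacity = a->base ? capacity : 0;
    a->used = 0;
    return a->base != NULL;
}

/* align must be a power of two. */
static void *arena_alloc(Arena *a, size_t size, size_t align) {
    uintptr_t start = (uintptr_t)(a->base + a->used);
    uintptr_t aligned = (start + (align - 1)) & ~(uintptr_t)(align - 1);
    size_t padding = (size_t)(aligned - start);
    /* Two-step check avoids overflow in padding + size. */
    if (padding > a->capacity - a->used || size > a->capacity - a->used - padding)
        return NULL; /* out of space: fail rather than grow */
    a->used += padding + size;
    return (void *)aligned;
}

/* Called at the start of every frame: last frame's allocations are discarded. */
static void arena_reset(Arena *a) { a->used = 0; }

static void arena_free(Arena *a) {
    free(a->base);
    a->base = NULL;
    a->capacity = a->used = 0;
}
```

The fixed capacity turns an unexpectedly large frame into a visible NULL rather than silent growth. That is the trade-off the arenas were introduced for.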

Another good idea from the Discord thread was creating a replacement for the primary allocator assigned in the context that simply calls assert(false) for any operation requested of it. This way we can detect when we are allocating from somewhere that we don't intend to, assuming code we call respects the allocators set in the context. I have yet to implement this idea but will do so.

As for why Jai instead of Odin or some other language, I am just attracted to its syntax and to its power combined with simplicity, at least for the features I care most about. The language feels familiar. It doesn't feel like something I've been writing in for a long time (I really haven't been), but like something that works mostly how I expect it to. Like earlier Python, and like good-enough C, it fits in my head. As you can see from the commit history, I did the entire Jai port in 2, maybe 3 commits. I could have done it in many, adding this functionality, testing it, and committing, then that functionality, testing it, and committing, etc., but I just plowed through it. Amazingly, once the program compiled, everything just worked, with the exception of one case where I passed by value instead of by pointer.

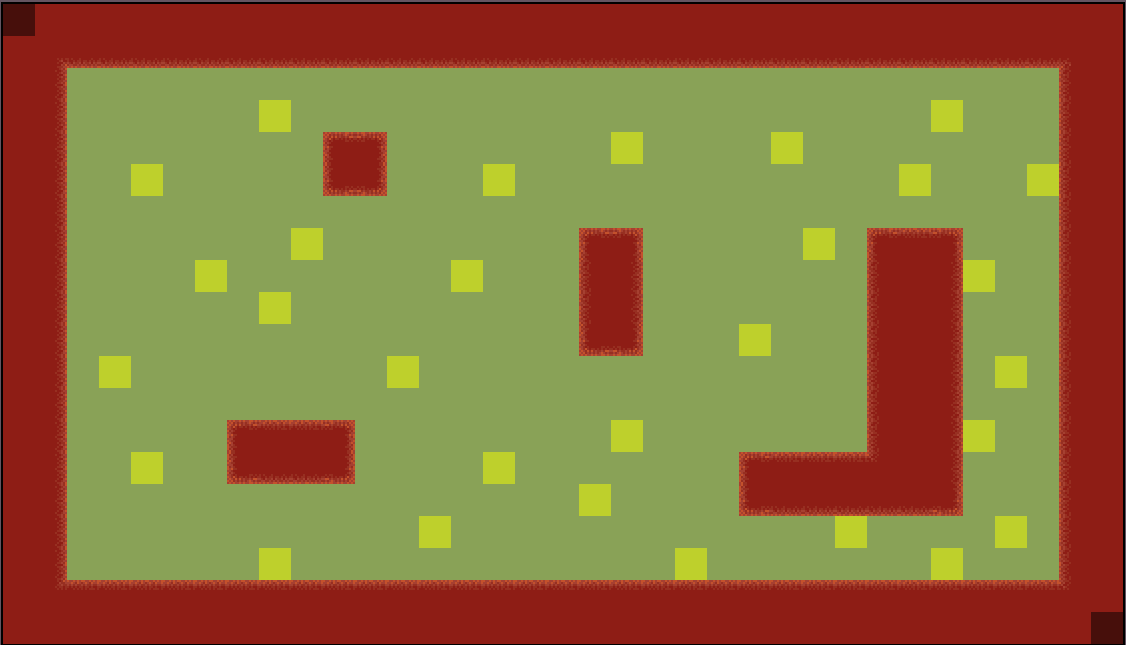

With the port to Jai done, I'm getting back to adding features and experimenting again. To close the week out, I added random entity spawning to the game. I chose to place spawn points on my test map by creating another tilemap layer in my Aseprite test map that marks tiles available for spawning. The spawn point placement in my live game was off. Then I realized that each layer in Aseprite is exported with a total size determined by a bounding box around the layer's top-left-most and bottom-right-most tiles. I briefly investigated ways to force the layer to have the same dimensions as all other layers but didn't turn anything up, at least not from the capabilities available through the Lua API.

So I resorted to having 3 tile IDs in the spawn layer. The first is the no-tile ID, which is just 0. This is the default in Aseprite, so I'm accommodating it more than designing it. The second is the ID that indicates a tile is available for spawning. The third marks placeholder tiles that I use to make sure the spawn layer matches the other layers' dimensions. The placeholder tile is black (drawn at 50% opacity) in the image. The yellow tiles are spawn tiles, the red tiles are collision tiles (on a separate collision layer), and the light green is moveable space. Beneath the red tiles are darker green tiles, which represent walls or bushes as seen in previous demo videos.

I use Jai's temporary allocator to build an array of spawn tile locations when I parse the map data, and then I iterate through the entities to randomly assign them a spawn location. I will probably re-hard-code spawn locations soon to create reproducible environments for testing other features, but then I will reenable random spawn in some fashion. The idea plays into the game concept I have in mind.
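The spawn-layer scheme can be sketched in C in two steps. First, scan the layer for the spawn tile ID. Second, shuffle the collected positions (Fisher-Yates) and hand them out. Several pieces are assumptions: the tile ID values, the xorshift32 generator, and giving each entity a distinct tile. The post only says entities are randomly assigned a spawn location. Seeding the generator is one way to keep a random layout reproducible.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed tile IDs in the exported spawn layer (hypothetical values). */
enum { TILE_NONE = 0, TILE_SPAWN = 1, TILE_PLACEHOLDER = 2 };

typedef struct { int x, y; } TilePos;

/* Collect spawn tiles from a row-major layer that has the same dimensions as
   the other layers (the placeholder tiles guarantee that). Returns the count. */
static size_t collect_spawns(const uint8_t *layer, int w, int h,
                             TilePos *out, size_t max_out) {
    size_t n = 0;
    for (int y = 0; y < h; y++)
        for (int x = 0; x < w; x++)
            if (layer[y * w + x] == TILE_SPAWN && n < max_out) {
                out[n].x = x;
                out[n].y = y;
                n++;
            }
    return n;
}

/* Small seeded PRNG (xorshift32): the same seed reproduces the same layout. */
static uint32_t xorshift32(uint32_t *state) {
    uint32_t x = *state;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    return *state = x;
}

/* Shuffle the spawn list (Fisher-Yates) and give each entity a distinct tile.
   Returns 0 if there are more entities than spawn tiles. */
static int assign_spawns(TilePos *spawns, size_t n_spawns,
                         TilePos *entity_pos, size_t n_entities, uint32_t seed) {
    if (n_entities > n_spawns) return 0;
    uint32_t state = seed ? seed : 1u; /* xorshift state must be nonzero */
    for (size_t i = n_spawns; i > 1; i--) {
        size_t j = xorshift32(&state) % i; /* slight modulo bias; fine here */
        TilePos t = spawns[i - 1];
        spawns[i - 1] = spawns[j];
        spawns[j] = t;
    }
    for (size_t e = 0; e < n_entities; e++) entity_pos[e] = spawns[e];
    return 1;
}
```

Because the layer keeps the full map dimensions, a layer index maps directly to a map coordinate with no per-layer offset to track.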